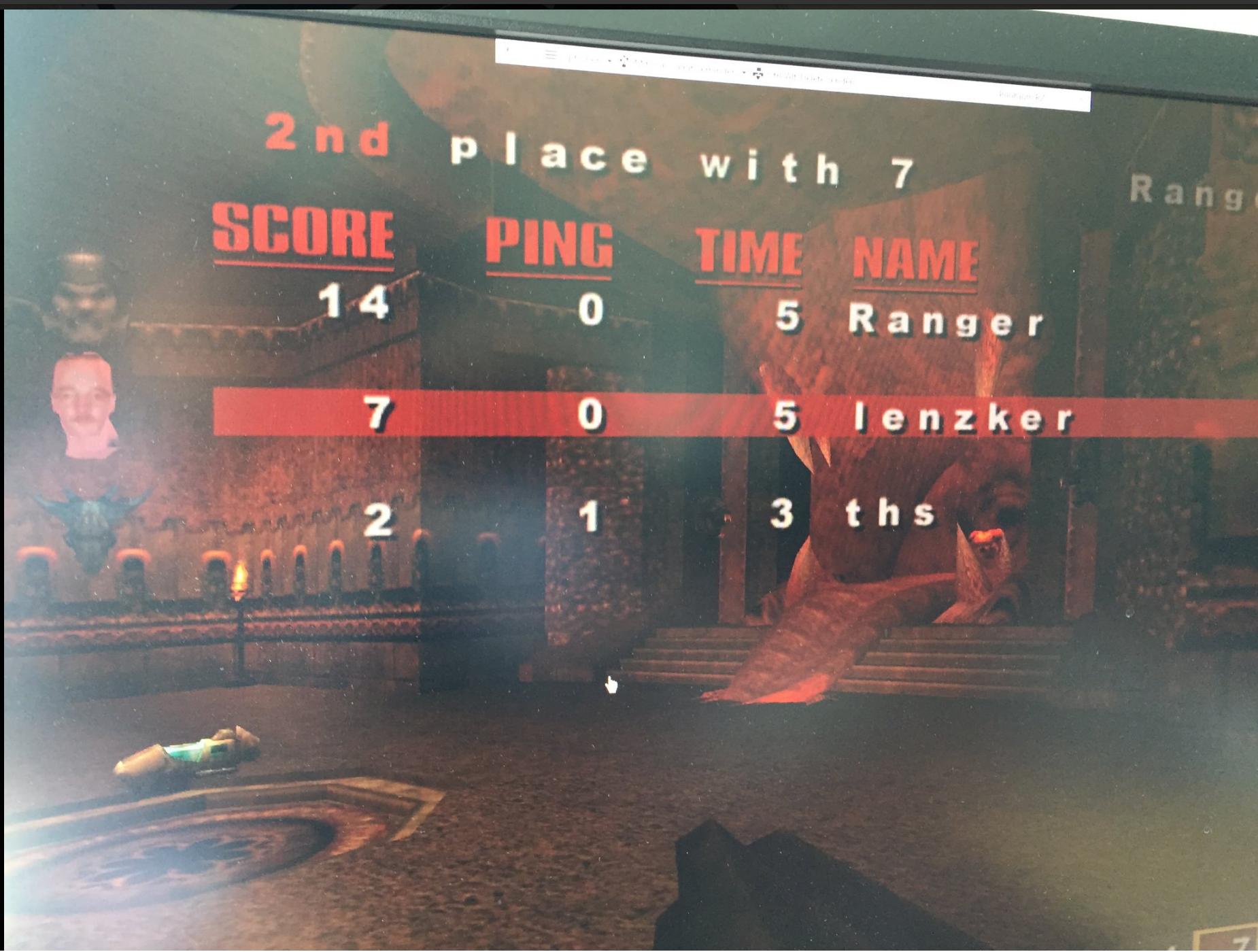

The use of the Graphics Processing Unit (GPU) in the virtualization field is becoming more and more important. The GPU's shaders can run massively parallel calculations and speed up applications tremendously. In the End-User Computing (EUC) field the GPU plays an important role as well (depending on the use case). Not only certain 3D applications require GPU acceleration (e.g. CAD applications, Quake 3); even everyday applications can benefit from it (browsers, Microsoft Office, etc.).

Since vSphere 6 there is no need to hide behind XenServer (for this specific feature): the hypervisor platform now supports vGPU, or, as it is currently called, Shared Pass-Through Graphics.

You install a graphics card that is listed on the HCL, install the correct drivers on the ESXi host and in the virtual machine, and voilà. Here we go… end-user experience at its best. Combine it with Horizon 7 and Desktop Virtualization is really getting serious.

Unfortunately there are still some limitations when you use the GPU.

Limitations:

- no vMotion: Virtual machines with a vGPU cannot be migrated via vMotion. This will definitely have an impact on operational processes (maintenance mode, patching, etc.).

- no Instant Clones: This cool feature of vSphere 6 and Horizon 7 currently cannot be combined with vGPUs. With Instant Clones you can roll out hundreds of VDIs within seconds, without depending on a component like View Composer for linked clones. Instant Clones take the update and refresh processes of VDIs to a new level.

- vSphere 6.0+ & vHW 11 required: To be honest, vSphere 6.0 was not the best release in terms of quality (CBT bugs, etc.). But if you want to use vGPU-based VMs in your environment, you have a very valid argument for updating to vSphere 6.x (with virtual hardware version 11).

- compatible hardware (HCL): The number of supported devices is quite limited. At the moment, 3 AMD and 4 NVIDIA cards are supported.

- maximum of 64 desktops per ESXi host (GRID K1 & 2 cards per server): At the moment, the maximum number of cards you are allowed to put into a server is two. With the lowest GPU profile on GRID K1 cards, that means a maximum of 64 3D-accelerated desktops per ESXi host.

- no Transparent Page Sharing (TPS): TPS helps reduce the amount of memory consumed on ESXi hosts by doing something similar to deduplication on the memory of the virtual machines. We all know that inter-VM TPS was disabled by default some time ago. Still, we can re-enable inter-VM TPS and make sure that TPS works right from the first second by disabling large pages. TPS can bring huge savings in the VDI field, but as soon as a VM has a vGPU, TPS will not share anything.

- no vSphere console access: This can have a negative impact on operational processes. As soon as a VM has a vGPU, you cannot access its graphics via the vSphere Client console. From a security perspective, though, this can be valuable, since it guarantees that a vSphere admin has no way to capture or record the virtual machine's video output.
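The 64-desktop ceiling from the density limitation is simple multiplication. The sketch below spells it out, assuming NVIDIA's published GRID K1 figures, which are not stated in this post: 4 physical GPUs per card, and at most 8 vGPUs per physical GPU with the smallest profiles (K100/K120Q). The function and constant names are hypothetical, chosen for illustration.

```python
# Assumed GRID K1 figures (NVIDIA's published specs, not stated in the post):
K1_GPUS_PER_CARD = 4          # physical GPUs on one GRID K1 card
MAX_VGPUS_PER_GPU_LOWEST = 8  # vGPU limit per physical GPU for the smallest profiles (K100/K120Q)
MAX_CARDS_PER_HOST = 2        # card limit per server, as stated in the post


def max_vgpu_desktops(cards=MAX_CARDS_PER_HOST,
                      gpus_per_card=K1_GPUS_PER_CARD,
                      vgpus_per_gpu=MAX_VGPUS_PER_GPU_LOWEST):
    """Upper bound of vGPU-backed desktops on one ESXi host."""
    return cards * gpus_per_card * vgpus_per_gpu


print(max_vgpu_desktops())         # 2 * 4 * 8 = 64
print(max_vgpu_desktops(cards=1))  # 1 * 4 * 8 = 32
```

Any larger profile lowers the per-GPU vGPU count, so 64 is a hard ceiling for this hardware, not a typical sizing target.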
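The TPS behaviour from the list above can be illustrated with a toy model of content-based page sharing. This is a minimal sketch, not ESXi's implementation. It assumes that pages are identified by a SHA-256 hash of their 4 KB content, that salting is modelled by adding the VM name to the key (so identical pages are shared only within one VM), and that a VM with a vGPU never contributes shareable pages. Large pages are not modelled. On a real host, inter-VM sharing and large-page backing are controlled by the advanced settings `Mem.ShareForceSalting` and `Mem.AllocGuestLargePage`. The names `backing_pages`, `inter_vm_sharing` and the VM dictionaries are hypothetical.

```python
import hashlib


def backing_pages(vms, inter_vm_sharing=True):
    """Count the physical pages needed to back all guest pages.

    Toy model of content-based page sharing, NOT ESXi's implementation.
    vms: dict of VM name -> {"pages": list of bytes, "vgpu": bool}
    """
    shareable = set()  # one entry per distinct shareable page (per content, or per VM + content)
    unshared = 0       # pages that always need their own physical page
    for name, vm in vms.items():
        for page in vm["pages"]:
            if vm["vgpu"]:
                # Per the post, TPS shares nothing for a VM with a vGPU.
                unshared += 1
                continue
            # A real implementation would also compare bytes on a hash match.
            digest = hashlib.sha256(page).digest()
            # Salting is modelled by adding the VM name to the key, which
            # limits sharing to identical pages inside the same VM.
            key = digest if inter_vm_sharing else (name, digest)
            shareable.add(key)
    return len(shareable) + unshared


ZERO = bytes(4096)        # an all-zero 4 KB page
OS_PAGE = b"\x01" * 4096  # stand-in for an identical OS page in every VM

vms = {
    "vdi-a": {"pages": [ZERO, ZERO, OS_PAGE], "vgpu": False},
    "vdi-b": {"pages": [ZERO, OS_PAGE], "vgpu": False},
    "vdi-gpu": {"pages": [ZERO, OS_PAGE], "vgpu": True},
}

print(backing_pages(vms, inter_vm_sharing=True))   # 4
print(backing_pages(vms, inter_vm_sharing=False))  # 6
```

Of the seven guest pages, inter-VM sharing backs them with four physical pages and salted (intra-VM only) sharing with six. The two pages of the vGPU VM are never shared in either case.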

I like the look and feel you can achieve with a well-connected, 3D-accelerated VDI. But it's still important to be aware of the current limitations (July 2016).

Take a free online test drive with NVIDIA to get a feel for vGPU, learn the constraints of using vGPU, and do some proper testing in your own environment.

Just curious if any of this has changed since vSphere 6.5 and View 7.x…

Me too, with regard to vMotion.