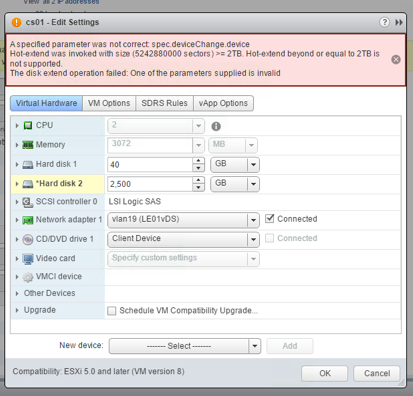

Even though I have mostly been doing conceptual work over the last few months, I was recently asked for help because a customer was unable to update their environment via vSphere Update Manager.

This blog post explains how to deal with the following event/task error messages:

“Could not scan ESXihostname” or “Cannot execute upgrade script on host”

vSphere Update Manager is now integrated into the vCenter Server Appliance and is well suited for patching and upgrading ESXi hosts. In theory, the process is really straightforward:

- Optional: Create a baseline that includes all relevant ESXi components (so-called vSphere Installation Bundles [VIBs]) that you want to add to your ESXi hosts (e.g. create a static baseline for a specific vSphere build)

- Attach the baseline to a Cluster or an ESXi host object

- Scan the Cluster or ESXi host object against the baseline. The scan shows which baseline elements are already installed on the ESXi host and which are missing. If all baseline items exist on the ESXi host, the host is declared compliant.

- Remediate the baseline. The ESXi host is placed into maintenance mode and the components defined in the baseline are installed on it. The host then reboots and exits maintenance mode. Afterwards the ESXi host should be compliant with the baseline.
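To make the scan and remediation logic a bit more tangible, the four steps can be modeled in a few lines of Python. This is only a minimal sketch under a simplified model, assuming a baseline is just a set of VIB identifiers and a host is a set of installed VIBs plus a maintenance-mode flag. `Baseline`, `Host`, `attach`, `scan` and `remediate` are hypothetical names made up for illustration; they are not part of any VMware API and the code does not talk to vCenter. The point it shows is the compliance rule: a host is compliant as soon as no baseline item is missing.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Baseline:
    """Hypothetical stand-in for a static baseline: a named set of VIB identifiers."""
    name: str
    vibs: frozenset  # illustrative identifiers only, e.g. "vib-a 1.0"


@dataclass
class Host:
    """Hypothetical stand-in for an ESXi host object (not a VMware API)."""
    name: str
    installed_vibs: set = field(default_factory=set)
    in_maintenance_mode: bool = False
    attached_baselines: list = field(default_factory=list)
    reboot_count: int = 0


def attach(baseline: Baseline, host: Host) -> None:
    """Step 2: attach the baseline to the host object (idempotent)."""
    if baseline not in host.attached_baselines:
        host.attached_baselines.append(baseline)


def scan(host: Host, baseline: Baseline) -> dict:
    """Step 3: compare the baseline items with what is installed on the host."""
    installed = baseline.vibs & host.installed_vibs
    missing = baseline.vibs - host.installed_vibs
    return {
        "installed": sorted(installed),
        "missing": sorted(missing),
        # Compliant only if every baseline item is present on the host.
        "compliant": not missing,
    }


def remediate(host: Host, baseline: Baseline) -> dict:
    """Step 4: maintenance mode -> install missing VIBs -> reboot -> exit maintenance mode."""
    result = scan(host, baseline)
    if result["compliant"]:
        return result  # nothing to install, no reboot needed
    host.in_maintenance_mode = True
    host.installed_vibs |= set(result["missing"])
    host.reboot_count += 1  # simulated reboot
    host.in_maintenance_mode = False
    # Re-scan: afterwards the host should be compliant with the baseline.
    return scan(host, baseline)


if __name__ == "__main__":
    baseline = Baseline("static-build-baseline", frozenset({"vib-a 1.0", "vib-b 2.0"}))
    host = Host("esxi01", installed_vibs={"vib-a 1.0"})
    attach(baseline, host)
    print(scan(host, baseline))
    print(remediate(host, baseline))
```

Running it prints `{'installed': ['vib-a 1.0'], 'missing': ['vib-b 2.0'], 'compliant': False}` for the scan and `{'installed': ['vib-a 1.0', 'vib-b 2.0'], 'missing': [], 'compliant': True}` after remediation. Remediating an already compliant host is a no-op in this model, which mirrors why a scan is worth doing before remediation.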

That is quite easy; easy enough that this module takes only about one hour in the vSphere: Install, Configure & Manage class (from time to time I still deliver VMware training courses, so contact me if you want to join :).
